Steve Nadis

Feb 4, 2024 8:00 AM

How to Guarantee the Safety of Autonomous Vehicles

The original version of this story appeared in Quanta Magazine.

Driverless cars and planes are no longer the stuff of the future. In the city of San Francisco alone, two taxi companies have collectively logged 8 million miles of autonomous driving through August 2023. And more than 850,000 autonomous aerial vehicles, or drones, are registered in the United States, not counting those owned by the military.

But there are legitimate concerns about safety. For example, in a 10-month period that ended in May 2022, the National Highway Traffic Safety Administration reported nearly 400 crashes involving cars using some form of autonomous control. Six people died as a result of these accidents, and five were seriously injured.

The usual way of addressing this issue, sometimes called “testing by exhaustion,” involves testing these systems until you’re satisfied they’re safe. But you can never be sure that this process will uncover all potential flaws. “People carry out tests until they’ve exhausted their resources and patience,” said Sayan Mitra, a computer scientist at the University of Illinois, Urbana-Champaign. Testing alone, however, cannot provide guarantees.

Mitra and his colleagues can. His team has managed to prove the safety of lane-tracking capabilities for cars and landing systems for autonomous aircraft. Their strategy is now being used to help land drones on aircraft carriers, and Boeing plans to test it on an experimental aircraft this year. “Their method of providing end-to-end safety guarantees is very important,” said Corina Pasareanu, a research scientist at Carnegie Mellon University and NASA’s Ames Research Center.

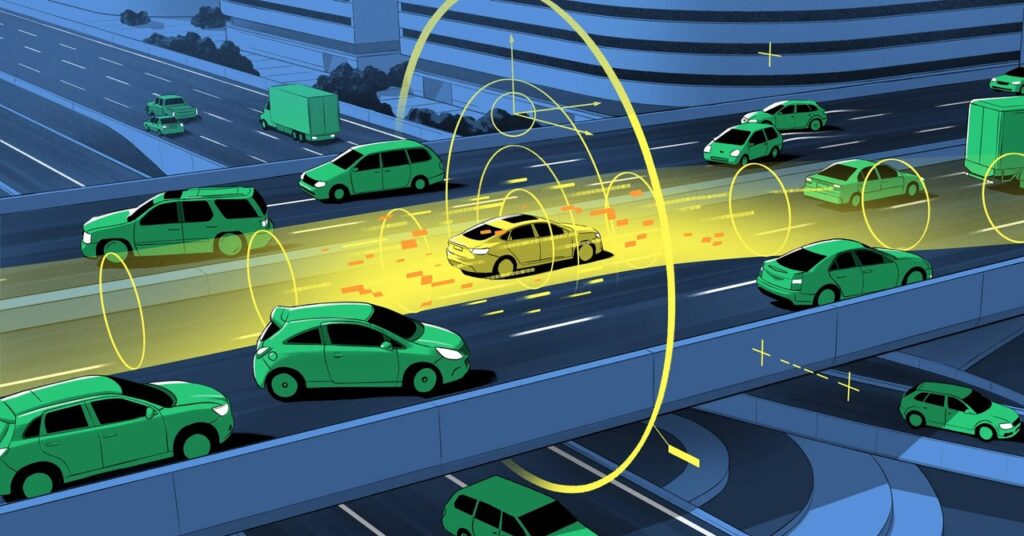

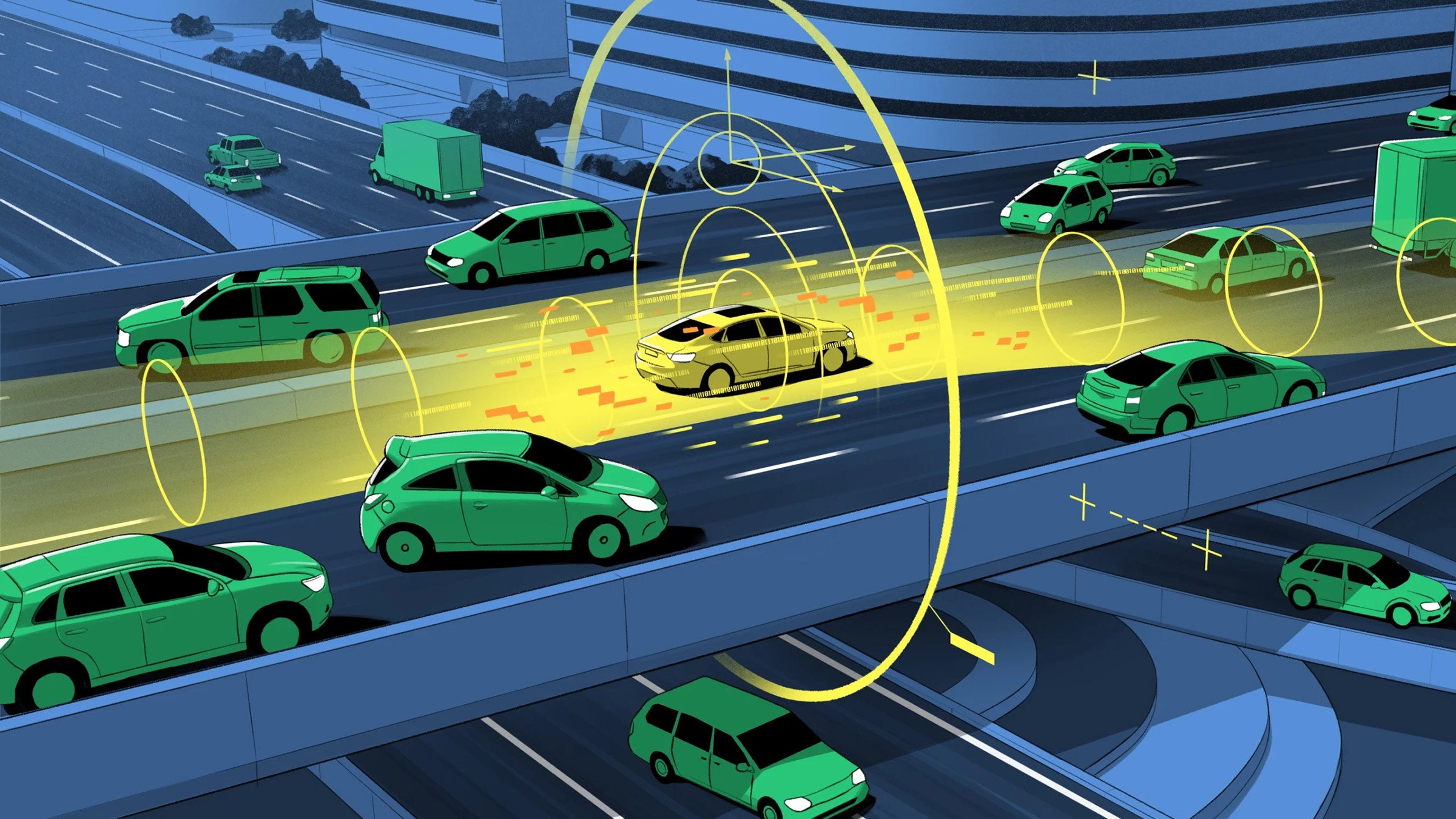

Their work involves guaranteeing the results of the machine-learning algorithms that are used to inform autonomous vehicles. At a high level, many autonomous vehicles have two components: a perception system and a control system. The perception system tells you, for instance, how far your car is from the center of the lane, or what direction a plane is heading in and what its angle is with respect to the horizon. The system operates by feeding raw data from cameras and other sensory tools to machine-learning algorithms based on neural networks, which re-create the environment outside the vehicle.

These assessments are then sent to a separate system, the control module, which decides what to do. If there’s an upcoming obstacle, for instance, it decides whether to apply the brakes or steer around it. According to Luca Carlone, an associate professor at the Massachusetts Institute of Technology, while the control module relies on well-established technology, “it is making decisions based on the perception results, and there’s no guarantee that those results are correct.”

To provide a safety guarantee, Mitra’s team worked on ensuring the reliability of the vehicle’s perception system. They first assumed that it’s possible to guarantee safety when a perfect rendering of the outside world is available. They then determined how much error the perception system introduces into its re-creation of the vehicle’s surroundings.

The key to this strategy is to quantify the uncertainties involved, known as the error band, or the “known unknowns,” as Mitra put it. That calculation comes from what he and his team call a perception contract. In software engineering, a contract is a commitment that, for a given input to a computer program, the output will fall within a specified range. Figuring out this range isn’t easy. How accurate are the car’s sensors? How much fog, rain, or solar glare can a drone tolerate? But if you can keep the vehicle within a specified range of uncertainty, and if the determination of that range is sufficiently accurate, Mitra’s team proved that you can ensure its safety.
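To make the idea of a contract concrete, here is a minimal Python sketch for a lane-keeping scenario. It is an illustration under stated assumptions, not the team's implementation: the class `PerceptionContract`, the function `is_provably_in_lane`, and all numeric values are hypothetical. The sketch assumes the contract takes the simplest possible form, a fixed bound on the difference between the perceived and the true lateral offset.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PerceptionContract:
    """Hypothetical contract: perceived offset is within max_error_m of the truth."""

    max_error_m: float  # assumed error band, in meters

    def holds(self, true_offset_m: float, perceived_offset_m: float) -> bool:
        # The contract is satisfied when the perception error stays in the band.
        return abs(perceived_offset_m - true_offset_m) <= self.max_error_m

    def perceived_limit(self, lane_half_width_m: float) -> float:
        # Largest perceived offset that still implies the true offset is
        # inside the lane, provided the contract holds (triangle inequality).
        limit = lane_half_width_m - self.max_error_m
        if limit < 0:
            raise ValueError("error band is wider than the lane; no safe margin")
        return limit


def is_provably_in_lane(perceived_offset_m: float,
                        lane_half_width_m: float,
                        contract: PerceptionContract) -> bool:
    # If this returns True and the contract holds, then
    # |true offset| <= |perceived offset| + max_error_m <= lane half-width.
    return abs(perceived_offset_m) <= contract.perceived_limit(lane_half_width_m)


if __name__ == "__main__":
    contract = PerceptionContract(max_error_m=0.25)
    print(contract.holds(true_offset_m=0.5, perceived_offset_m=0.6))
    print(is_provably_in_lane(1.4, lane_half_width_m=1.75, contract=contract))
```

The design point is that the controller only ever sees the perceived value, so the safety check is phrased entirely in terms of that value plus the agreed error band.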

It’s a familiar situation for anyone with an imprecise speedometer. If you know the device is never off by more than 5 miles per hour, you can still avoid speeding by always staying 5 mph below the speed limit (as indicated by your untrustworthy speedometer). A perception contract offers a similar guarantee of the safety of an imperfect system that depends on machine learning.
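The speedometer arithmetic can be written out in a few lines of Python. This is only a worked version of the analogy; the function names and the 65 mph speed limit are made up for illustration.

```python
def max_safe_reading(speed_limit_mph: float, max_error_mph: float) -> float:
    # Highest speedometer reading that cannot correspond to speeding,
    # given that the reading is never off by more than max_error_mph.
    return speed_limit_mph - max_error_mph


def true_speed_range(reading_mph: float, max_error_mph: float) -> tuple[float, float]:
    # Every true speed consistent with the reading and the error bound.
    return (reading_mph - max_error_mph, reading_mph + max_error_mph)


if __name__ == "__main__":
    limit, error = 65, 5  # hypothetical speed limit; error bound from the analogy
    reading = max_safe_reading(limit, error)
    low, high = true_speed_range(reading, error)
    print(f"Hold the needle at {reading} mph; true speed is between {low} and {high} mph.")
```

Even in the worst case the true speed equals the limit and never exceeds it, which is the same reasoning a perception contract applies to a vehicle's sensed position.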

“You don’t need perfect perception,” Carlone said. “You just want it to be good enough so as not to put safety at risk.” The team’s biggest contributions, he said, are “introducing the entire idea of perception contracts” and providing the methods for constructing them. They did this by drawing on techniques from the branch of computer science called formal verification, which provides a mathematical way of confirming that the behavior of a system satisfies a set of requirements.

“Even though we don’t know exactly how the neural network does what it does,” Mitra said, they showed that it’s still possible to prove numerically that the uncertainty of a neural network’s output lies within certain bounds. And if that’s the case, then the system will be safe. “We can then provide a statistical guarantee as to whether (and to what degree) a given neural network will actually meet those bounds.”
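The article does not describe how the team computes its statistical guarantee. As a generic illustration only, the sketch below uses a standard tool, the one-sided Hoeffding inequality, to turn a set of measured perception errors into a high-confidence upper bound on how often the error band is violated. It assumes the samples are independent and drawn from the same conditions the vehicle will face; the function name `violation_rate_upper_bound` and the parameter `delta` are hypothetical.

```python
import math


def violation_rate_upper_bound(errors, bound, delta=0.05):
    """Upper bound on the true rate at which |error| exceeds `bound`.

    With probability at least 1 - delta over the random sample, the true
    violation rate is no larger than the returned value (Hoeffding's
    inequality, assuming independent, identically distributed samples).
    """
    n = len(errors)
    if n == 0:
        raise ValueError("need at least one sample")
    if not 0 < delta < 1:
        raise ValueError("delta must be in (0, 1)")
    violations = sum(1 for e in errors if abs(e) > bound)
    empirical_rate = violations / n
    slack = math.sqrt(math.log(1 / delta) / (2 * n))
    return min(1.0, empirical_rate + slack)


if __name__ == "__main__":
    # Made-up data: 1,000 test frames, none outside a 0.5 m error band.
    sample_errors = [0.1] * 1000
    print(violation_rate_upper_bound(sample_errors, bound=0.5))
```

The slack term shrinks as the number of test samples grows, which is one way to express "to what degree" a network meets its bounds rather than giving a yes-or-no answer.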

The aerospace company Sierra Nevada is currently testing these safety guarantees while landing a drone on an aircraft carrier. This problem is in some ways more complicated than driving cars because of the extra dimension involved in flying. “In landing, there are two main tasks,” said Dragos Margineantu, AI chief technologist at Boeing, “aligning the plane with the runway and making sure the runway is free of obstacles. Our work with Sayan involves getting guarantees for these two functions.”

“Simulations using Sayan’s algorithm show that the alignment [of an airplane prior to landing] does improve,” he said. The next step, planned for later this year, is to use these systems while actually landing a Boeing experimental airplane. One of the biggest challenges, Margineantu noted, will be figuring out what we don’t know (“determining the uncertainty in our estimates”) and seeing how that affects safety. “Most errors happen when we do things that we think we know, and it turns out that we don’t.”

Original story reprinted with permission from Quanta Magazine, an editorially independent publication of the Simons Foundation whose mission is to enhance public understanding of science by covering research developments and trends in mathematics and the physical and life sciences.